How LLM Guardrails Reduce AI Risk in Software Development

Andrew ParkEditorial Lead

Andrew ParkEditorial Lead

Heavybit

How LLM Guardrails Minimize the Risks of AI in Software Development

Integrating Language Learning Models (LLMs) into software development is revolutionizing AI capabilities. However, it also raises significant ethical and security concerns.

LLM guardrails are one tool for ensuring responsible AI usage and fostering trust among stakeholders and the public. In this guide, we’ll delve into the critical role of LLM guardrails, exploring their implementation strategies, benefits, and challenges while highlighting how they are shaping a responsible AI landscape and reducing risks.

Learn more about the mechanics of ML models in this full guide to AI inference.

What are the Risks of AI in Software Development?

When using LLMs, there are several risks that aren’t necessarily present in rule-based AI systems. It’s important to weigh risks against your LLM’s potential capabilities.

Bias and Fairness

LLMs can inherit any biases present in their training data, leading to the generation of inaccurate or unfair content. This can even perpetuate societal biases and inequalities, leading to discriminatory outcomes.

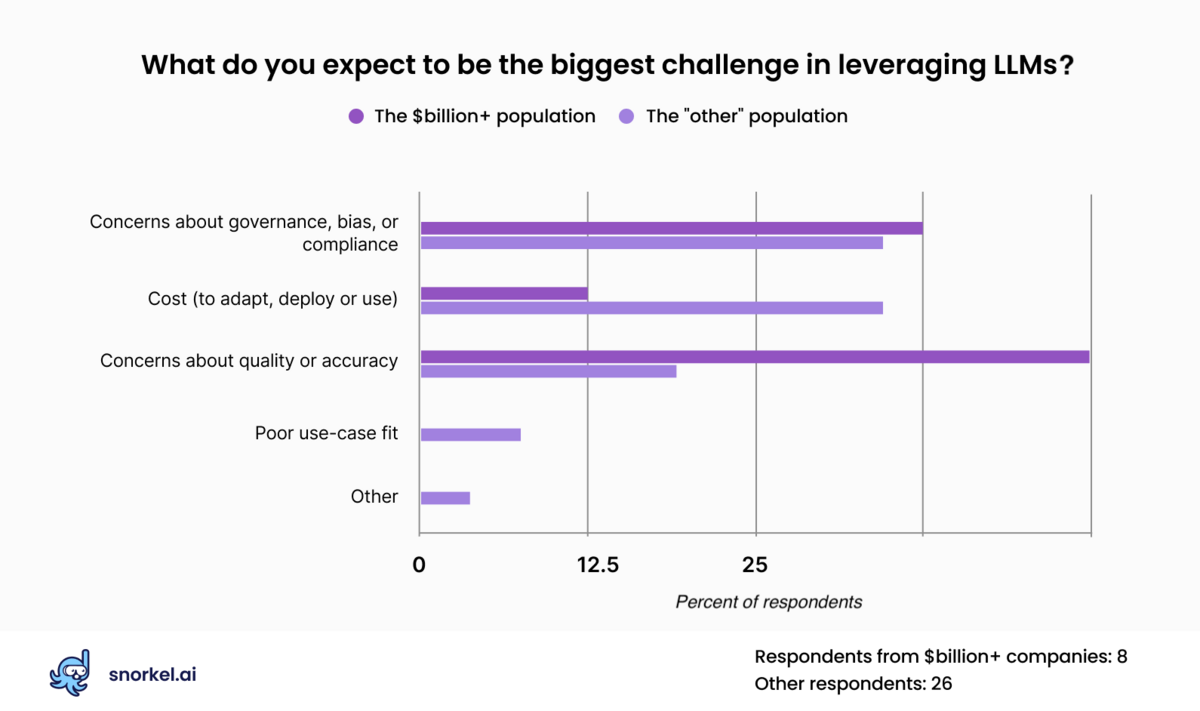

Bias and accuracy are key concerns for those working with LLMs. Image courtesy snorkel.

Legal and Regulatory Compliance

AI systems must comply with laws and regulations, such as data protection laws (like GDPR), intellectual property rights, and industry-specific regulations. Non-compliance can often lead to legal consequences and reputational damage.

Even with existing regulations in place, there is public concern over the lack of regulation regarding generative AI in software development, especially in open source.

Privacy Risks

LLMs may inadvertently disclose sensitive or private information if not properly trained or deployed. Privacy risks can arise from AI systems' collection, storage, and processing of personal data.

Security Vulnerabilities

AI models are susceptible to attacks by malicious actors, which may involve manipulated input data to deceive the model and produce incorrect outputs. Security vulnerabilities in AI systems can also be exploited to gain unauthorized access or cause data breaches.

What are LLM Guardrails?

LLM guardrails are a set of predefined protocols, rules, and limitations built to to govern the behavior and outputs of generative AI systems. They’re implemented during LLMOps development. What is LLMOps? LLMOps, or LLM Operations, comprises the techniques and tools that form the framework for managing LLMs in production environments.

As such, guardrails act as safety mechanisms, ensuring that AI software meets ethical standards. Guardrails provide guidelines and boundaries that enable organizations to mitigate the risks of generative AI in software development, while still utilizing it to its full potential.

For example, generative AI models may lack a nuanced contextual understanding for a specific use case. As a result, responses may be irrelevant or even potentially harmful. LLM guardrails that enhance contextual understanding improve a model's ability to return safe, effective responses.

Also, the rapidly changing generative AI landscape demands that LLMs adapt to change over time. They must allow for updates and refinements that reflect the changing needs of their users, and society as a whole.

And finally, the responses returned by LLMs must stay within the acceptable limits defined by the organization using them. Otherwise, they risk breaching ethical guidelines or even falling into legal pitfalls. LLM guardrails can assist with policy enforcement to ensure better results.

Guardrails are becoming increasingly important as developers turn to generative AI to help with various tasks throughout the software development lifecycle to save time and money.

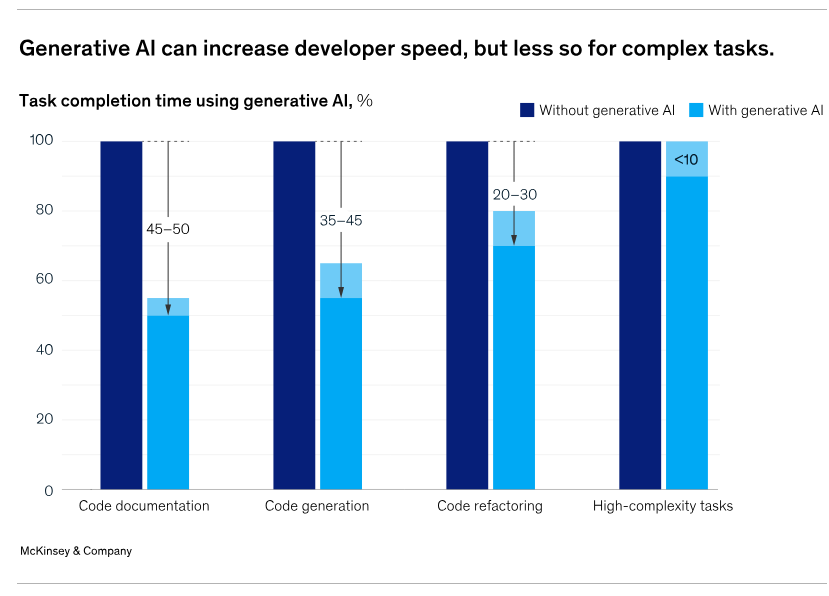

Generative AI’s impact on development processes. Image courtesy McKinsey.

Types of LLM Guardrails

There are five prominent categories of LLM guardrails you should be aware of.

Adaptive Guardrails

Adaptive guardrails evolve alongside a model, ensuring ongoing compliance with legal and ethical standards as the LLM learns and adapts.

Compliance Guardrails

Compliance guardrails ensure the outputs generated by the LLM align with legal standards, including data protection and user privacy. They’re frequently used in industries where regulatory compliance is critical, such as healthcare, finance, and legal services.

Contextual Guardrails

Contextual guardrails help fine-tune the LLM’s understanding of what is relevant and acceptable for its specific use case. They help prevent the generation of inappropriate, harmful, or illegal text.

Ethical Guardrails

Ethical guardrails impose limitations designed to prevent biased, discriminatory, or harmful outputs and ensure that an LLM complies with accepted moral and social norms.

Security Guardrails

Security guardrails are designed to protect against external and internal security threats. They aim to ensure the model can't be manipulated to spread misinformation or disclose sensitive information.

How do LLM Guardrails Minimize the Risks of AI in Software Development?

AI is increasingly being used in software development worldwide–finding applications in everything from generating code to customer service chatbots in omnichannel contact center solutions, and even data analytics tools.

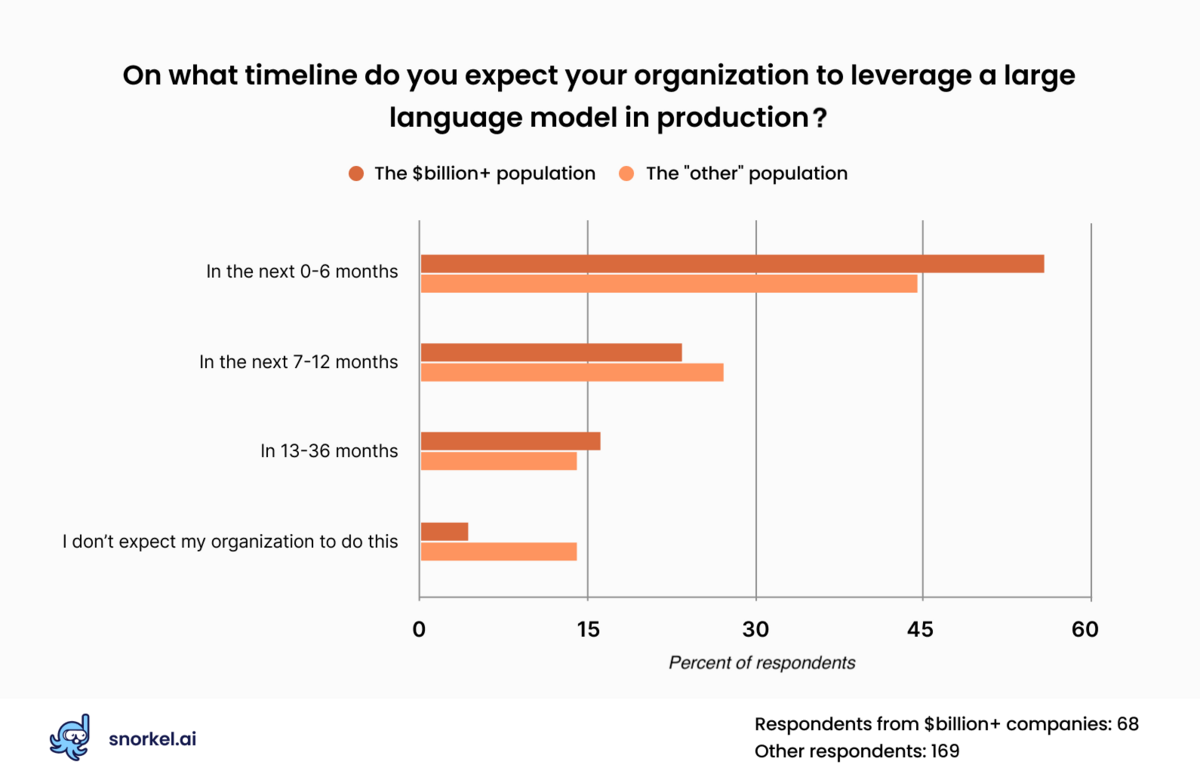

Many organizations plan to implement a LLM into production soon. Image courtesy snorkel.

Using LLM guardrails can help to mitigate the risks of AI in software development, saving organizations time and money. Here’s how:

Explainability

Guardrails promote explainability and transparency by requiring AI systems to provide clear explanations of their decisions and behaviors. This enhances trust among users and allows developers to understand how the AI works and why it produces certain outputs.

If incorrect or harmful outputs are being generated, developers have a better chance of rectifying the underlying issue.

Legal Compliance

Various relevant laws and regulations affect the use of AI systems. For instance, data protection laws such as GDPR and intellectual property rights are both key areas of concern for LLMs involved in software development.

Guardrails ensure that AI systems comply with these laws and regulations at every stage of development. They put in place a framework that ensures legal considerations are taken into account everywhere that LLMs are implemented.

Privacy Preservation

LLM guardrails can help protect user privacy by introducing data anonymization, minimization, and encryption techniques. They can also regulate access to sensitive information, ensuring that AI systems only use data for authorized purposes.

Ethical Considerations

LLM guardrails often include checks to ensure that AI systems developed adhere to ethical guidelines and principles. These might involve preventing biases, avoiding discrimination, and blocking harmful content generation.

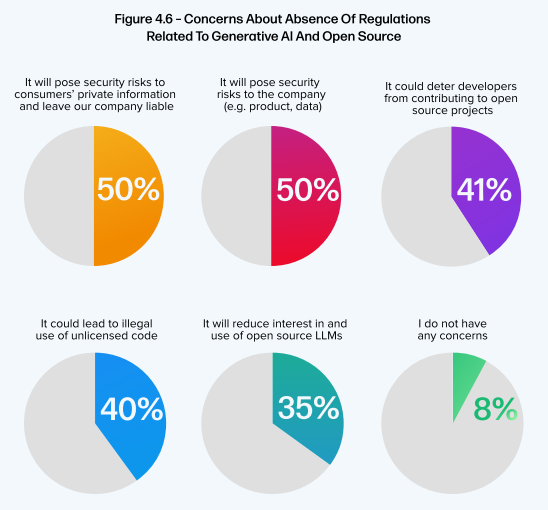

Industry standards and self-regulation are essential interim solutions for addressing the unique challenges posed by LLMs while specific legal frameworks are developed. The absence of these frameworks raises many concerns for developers.

Developers see security and privacy as top regulatory risks for GenAI. Image courtesy sonatype.

Safety and Security

LLM guardrails help to improve the safety and security of AI systems during software development. Measures can be implemented to detect and prevent potential vulnerabilities, security breaches, and malicious use cases.

Guardrails promote secure software development practices throughout the AI development lifecycle. This includes adhering to secure coding standards, conducting regular security reviews, and integrating security testing into the development process.

Robustness and Reliability

Guardrails help to ensure that AI systems are more robust and reliable. This improves their performance under various conditions, including edge cases, different input data distributions and environmental changes.

To achieve this, guardrails often demand that rigorous testing, validation, and monitoring are required throughout the development lifecycle.

Accountability and Governance

Clear accountability frameworks and governance mechanisms can be established for AI systems using LLM guardrails. This includes guidelines for responsible AI development, deployment, and monitoring.

In this way, guardrails can ensure that developers are held accountable for the behavior and impacts of their AI systems.

Fairness and Equity

LLM guardrails promote fairness and equity by identifying and mitigating biases in AI systems, ensuring that they treat all users fairly and do not perpetuate or amplify existing inequalities.

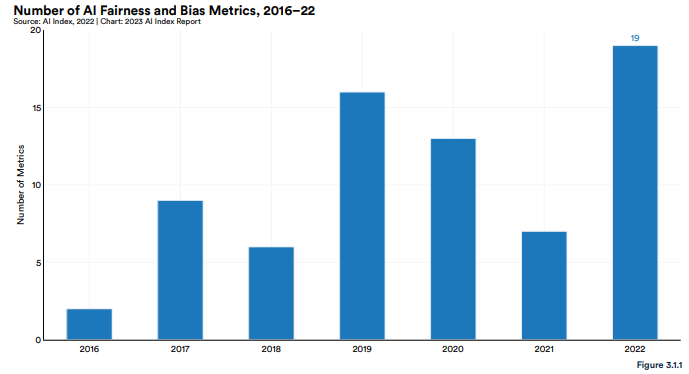

Fairness and bias metrics and evaluation criteria are often implemented to assess the performance of LLMs in this regard.

AI bias criteria continue to grow over time. Image courtesy Stanford.

Techniques such as bias audits, fairness-aware training, and dataset balancing help identify and address biases to ensure that the model's outputs are equitable across different demographic groups.

Implementation Strategies for LLM Guardrails

Implementing LLM guardrails effectively requires a combination of technical and ethical strategies:

- Technical Measures: The training process for AI models should include pre-processing inputs, post-processing outputs, and embedded ethical considerations.

- Continuous Monitoring: LLMs should regularly have their performance assessed to identify and rectify any issues that may arise. This is crucial both before and after deployment.

- Ethical Frameworks: Ethical guidelines should be developed to govern the design, development, and deployment of LLMs, ensuring that they adhere to legal and moral standards.

What are the Challenges of Implementing LLM Guardrails?

While implementing guardrails in LLMs brings numerous benefits, it’s not without its challenges.

Balance

When establishing LLM guardrails, decision-makers must ensure they put measures in place that are neither too strict nor too lenient. Going too far either way could result in guardrails which stifle the utility of LLMs, or leave them open to misuse.

Complexity of Language

Human language is incredibly nuanced and is constantly evolving. This makes it difficult to implement all-encompassing guardrails, especially when it comes to bias detection and content moderation.

Data Privacy

It can be incredibly difficult to ensure the anonymity and privacy of data within LLMs. The larger the datasets used to train the model, the harder this becomes.

What are the Benefits of Implementing LLM Guardrails?

Implementing LLM guardrails during software development may initially seem like an unnecessary step that will add further development time and accrue extra costs. However, several key benefits make it worth the extra investment.

Improving ROI

LLM Guardrails minimize risks that could lead to costly consequences, such as data breaches or harmful outputs being generated. They also help to maximize the potential of LLMs, allowing them to be deployed in a larger number of use cases to greater effect.

Maintaining Trust

Utilizing LLM guardrails helps to create transparency and builds trust among customers and stakeholders. This is essential for maintaining a strong position in an increasingly competitive landscape.

Upholding Ethics

LLM guardrails help ensure that developers working on generative AI adhere to legal compliances and moral standards, avoiding reputational damage.

Conclusion: LLM Guardrails Mean Less AI Risks in Software Development

LLM guardrails serve as indispensable tools for mitigating the risks of using AI in software development.

By implementing robust guardrails, organizations can navigate the complexities of AI development, such as bias, fairness, and privacy, while still upholding ethical standards and reducing security risks.

While challenges such as maintaining balance, addressing language complexity, and ensuring data privacy persist, LLM guardrails provide many valuable benefits in shaping a responsible AI landscape.

Content from the Library

The Role of Synthetic Data in AI/ML Programs in Software

Why Synthetic Data Matters for Software Running AI in production requires a great deal of data to feed to models. Reddit is now...

Open Source Ready Ep. #11, Unpacking MCP with Steve Manuel

In episode 11 of Open Source Ready, Brian Douglas and John McBride sit down with AI expert Steve Manuel to explore the Model...